Recently, Baltimore County had its second Omnilert-triggered police response in under a month, this time at Parkville High School. The AI claimed it detected a possible gun. Students were relocated, police swept the building, and, again, no weapon was found.

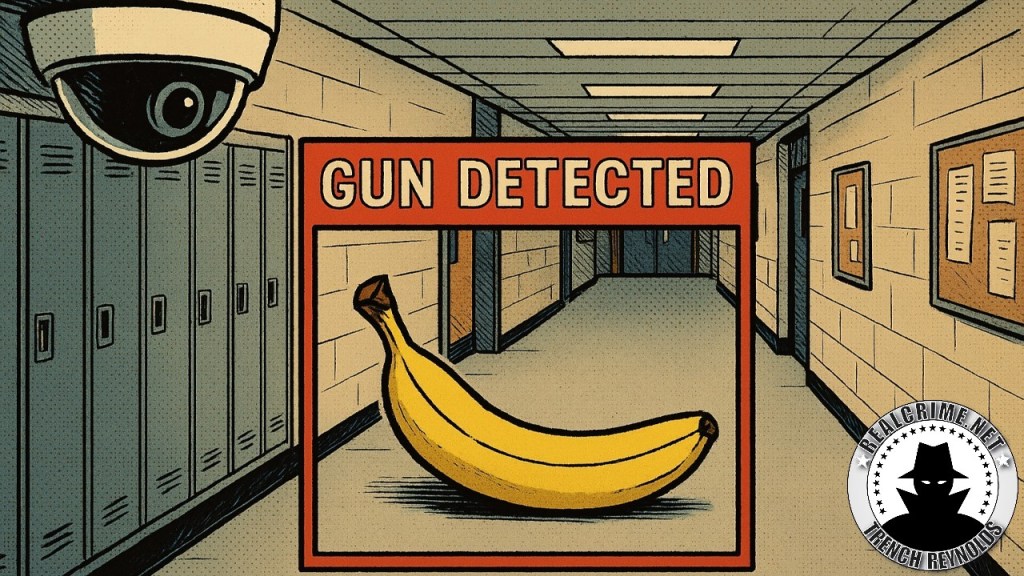

If it feels familiar, it’s because it is. Weeks earlier, Omnilert misidentified a bag of chips at Kenwood High as a gun, leading to a teenager being handcuffed at gunpoint. The 16-year-old later said he wondered if officers were going to kill him.

This is now a pattern, not an aberration.

WBAL-TV learned that Baltimore County school officials receive false positives from Omnilert every single day. Not occasionally. Not sometimes. Daily.

Yet the district only documents the big ones. The ones that blow up publicly, usually because police end up swarming a school over nothing.

Omnilert’s system funnels images and video to six different people who are supposed to verify potential weapons before police are dispatched. But the Kenwood and Parkville incidents show the reality: confusion, procedural breakdowns, and police responding before anyone knows what’s actually happening.

The school superintendent has said the district is reviewing the process. But no amount of retraining changes the fact that if your safety tool misfires every day, the problem isn’t “human error.” The problem is the tool.

Baltimore County isn’t the only place learning this the hard way.

At Antioch High School in Nashville, the same Omnilert system was online when a student opened fire in the cafeteria, killing one student and injuring two others. The system never detected the gun, even while it was being fired.

Nashville officials said the shooter’s position prevented activation. But that excuse only raises more questions.

If a system can’t detect a gun when it’s literally being used in a cafeteria shooting, what exactly is it good for?

Omnilert’s answer is always some variation of “It worked as intended.” But “working as intended” shouldn’t mean missing a real gun while overreacting to a snack bag.

This is the part where we ask the obvious question.

Why are schools paying millions of dollars for a system that produces daily false alarms and that failed to detect a real shooting?

The answer isn’t complicated.

It’s security theater.

School districts want to look like they’re doing something, anything, to reassure parents. AI gun detection sounds futuristic, decisive, and proactive. It looks great in a press release. It sounds comforting at school board meetings.

But it’s not keeping anyone safer.

What it is doing:

- Triggering police responses that put kids at risk.

- Creating unnecessary fear and disruption.

- Burning through taxpayer money.

- Treating ordinary objects as deadly weapons.

- Missing actual deadly weapons.

This technology is eating up millions of dollars that could be used for actual education, mental health support, hiring staff, reducing class sizes, or strengthening school counseling services. Any of these would contribute more to school safety than a camera guessing about snack bags.

Schools don’t need more gadgets. They need solutions that actually work.

(Sources)

- WBAL-TV: Omnilert alert led to police presence at Parkville High School

- Fox45 Baltimore: Police respond to Parkville High after Omnilert alert

- WBAL.com: Omnilert alert prompts search at Parkville High

- Patch: Students relocated after AI reported possible gun at Parkville

- WBAL-TV: BCPS gets daily false positives from Omnilert

- Fox45: Same AI gun detection software that missed school shooting, falsely flags Maryland student
